Since I got my Mac, I've been trying to learn Objective-C, Cocoa, Cocoa Touch, Xcode, and the rest of the iPhone development stack. As I spend more and more time on this, I've noticed some parallels between learning a new programming language and learning a new spoken language.

A few years back I moved to Central America and, among other things, learned Spanish. It was a long, slow, difficult process. At one point (maybe a few months in) I realized that Spanish is not just a literal translation of English. You can't simply swap English words for Spanish ones, and you can't carry English grammar over to Spanish either.

Aside from the basic spelling and grammar rules, languages are made up of many other things: styles, idioms, phrases, mannerisms, local accents, dialects, and more. Because of this, the trick to learning a new language (once you have a basic understanding of the syntax and grammar) is to try to think in the new language. You want to make the dialects and colloquialisms your own. You don't want to translate directly as you're speaking, since this usually produces a stilted, pidgin-like mix of the two languages. You want to express yourself fluently in the new language and be understood as well as possible.

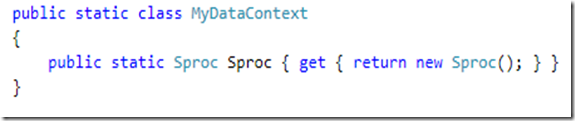

Translating this to learning a new programming language, you can see that just knowing the syntax and APIs is not enough. When you write code in a new language, you want to own that language. The code should look like it was written by someone fluent in and familiar with it. Code that follows the conventions other developers expect is easier for them to read and maintain.

This means that when you learn a new programming language, you should learn its coding standards as well. When you write code in C#, it should look like C# and should not use Hungarian notation. And when you write code in Objective-C, it should follow Objective-C conventions and shouldn't look like .NET or anything else. It can be difficult to adjust to a different coding style, but you haven't really learned the new language until you've done so.
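
To make the difference concrete, here is a small sketch of the same idea in Python. I picked Python only as a neutral illustration; it isn't one of the languages I'm learning here, and both function names are made up for this example. The first version works, but it reads like a word-for-word translation from another language. The second does the same thing the way someone fluent in Python would probably write it.

```python
# "Translated" style: Hungarian-style type prefixes, a PascalCase function
# name, and a manual index loop carried over from another language.
# (GetLongNames is a hypothetical name used only for illustration.)
def GetLongNames(lstNames, iMinLength):
    lstResult = []
    i = 0
    while i < len(lstNames):
        strName = lstNames[i]
        if len(strName) >= iMinLength:
            lstResult.append(strName.upper())
        i = i + 1
    return lstResult


# Fluent style: snake_case names, no type prefixes, and a list
# comprehension instead of manual indexing.
# (long_names is also a hypothetical name.)
def long_names(names, min_length):
    return [name.upper() for name in names if len(name) >= min_length]
```

Both functions return the same result for the same input. The only difference is how natural the code looks to someone who already "speaks" the language.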